GlacierDA

Decentralized AI applications face a pressing challenge: ensuring data availability for the large volume of off-chain transactions in the AI ecosystem. AI workloads rely on high-dimensional data and matrix computation, so they need a data availability layer tailored to the AI environment.

GlacierDA provides a programmable, modular, and scalable data availability service that integrates with decentralized storage, serving rollups and AI applications that need heavy, verifiable data workloads.

What is Data Availability (DA)

Typically, a blockchain consists of four core functional layers: execution, settlement, consensus, and data availability. Modular designs decouple these functions and optimize each one separately, which increases the throughput and scalability of the whole system.

Using techniques such as data availability sampling, the data availability layer lets light nodes verify that data is available without downloading all of it, so throughput can scale with demand. This opens up new possibilities for reliable and efficient operation across millions of rollups and applications.
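
To make the sampling idea concrete, here is a minimal sketch of the standard confidence calculation behind data availability sampling. It is not GlacierDA's implementation. It assumes a hypothetical rate-1/2 erasure code, so an adversary has to withhold at least half of the chunks to prevent reconstruction, and it assumes each sample is an independent uniform query. The function names and the `withheld_fraction` parameter are illustrative.

```python
import math


def detection_confidence(samples: int, withheld_fraction: float = 0.5) -> float:
    """Probability that at least one of `samples` independent uniform
    queries lands on a withheld chunk.

    withheld_fraction is the minimum share of chunks an adversary must hide
    to make the block unrecoverable (0.5 is an assumption for a rate-1/2 code).
    """
    return 1.0 - (1.0 - withheld_fraction) ** samples


def samples_needed(target_confidence: float, withheld_fraction: float = 0.5) -> int:
    """Smallest number of samples whose detection confidence reaches the target."""
    return math.ceil(
        math.log(1.0 - target_confidence) / math.log(1.0 - withheld_fraction)
    )


if __name__ == "__main__":
    for k in (5, 10, 20, 30):
        print(f"{k:>2} samples -> confidence {detection_confidence(k):.10f}")
    print("samples for 99% confidence:", samples_needed(0.99))
```

Under these assumptions a light node reaches roughly 99.9% confidence after 10 samples, regardless of how large the block is. That independence from block size is why sampling is cheaper than downloading the full data.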

Problem Statement: Why GlacierDA

The data availability problem is a critical issue in blockchain: all transaction data must be publicly accessible and verifiable across the network. Posting L2 transaction data to L1 consumes a large amount of gas and can congest L1. The problem becomes worse as the L2 ecosystem grows in the GenAI era.
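
As a rough illustration of the gas cost, the sketch below estimates what it takes to post a payload to Ethereum as calldata. It assumes the EIP-2028 calldata prices (16 gas per non-zero byte, 4 gas per zero byte) and ignores the base transaction cost, blob transactions, and later pricing changes, so the constants should be treated as assumptions. The function names and the gas price in the example are hypothetical.

```python
# Assumed calldata prices from EIP-2028; later upgrades changed L1 data pricing.
GAS_PER_NONZERO_BYTE = 16
GAS_PER_ZERO_BYTE = 4


def calldata_gas(payload: bytes) -> int:
    """Gas charged for the payload bytes alone (base transaction cost excluded)."""
    zero_bytes = payload.count(0)
    nonzero_bytes = len(payload) - zero_bytes
    return zero_bytes * GAS_PER_ZERO_BYTE + nonzero_bytes * GAS_PER_NONZERO_BYTE


def posting_cost_eth(payload: bytes, gas_price_gwei: float) -> float:
    """Cost in ETH of posting the payload at the given gas price (1 gwei = 1e-9 ETH)."""
    return calldata_gas(payload) * gas_price_gwei / 1e9


if __name__ == "__main__":
    payload = bytes([1]) * 100_000  # 100 kB of non-zero data, e.g. model weights
    print("gas:", calldata_gas(payload))
    print("ETH at 30 gwei:", posting_cost_eth(payload, 30))
```

Even a 100 kB payload costs 1.6 million gas under these assumptions, a sizeable share of a single L1 block. AI workloads that move megabytes of tensors cannot realistically publish their data this way.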

The promise and challenges of crypto + AI applications — Vitalik Buterin

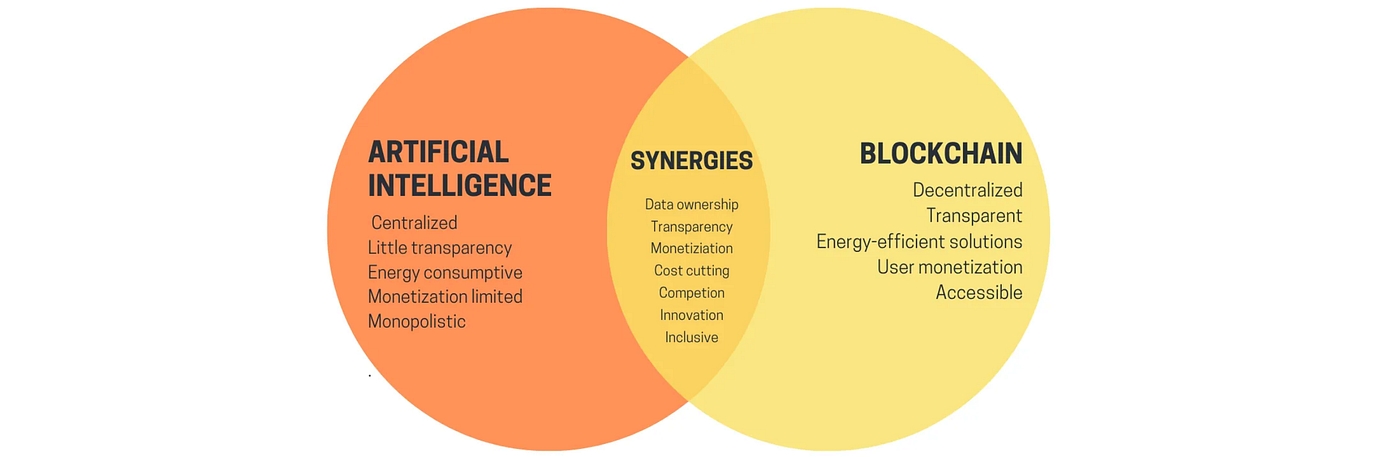

In his latest article, Vitalik discusses the great promise of the synergy between AI and crypto and highlights one major objection: cryptographic overhead. Today, the most mainstream on-chain AI/ML approaches are zkML and opML.

zkML (Zero-Knowledge Machine Learning) combines machine learning with cryptographic methods, namely zero-knowledge proofs, to ensure data privacy and security. opML (Optimistic Machine Learning) is another approach, designed to run large machine learning models on-chain efficiently. Both zkML and opML require a data availability layer that is efficient, secure, and able to meet the specific demands of generative AI applications.

The Solution: GlacierDA

GlacierDA is the first data availability layer that modularly integrates different decentralized storage layers and data availability sampling networks, offering high scalability and robust decentralization.

Decentralized data storage is an essential part of data availability because it answers the question of where the data is published. Integrating with decentralized storage lets GlacierDA support a variety of data types from diverse scenarios, covering not only Layer 2 networks but also decentralized AI infrastructure.

Verifiable Computation: GlacierDA addresses the demand for off-chain computation and verification of GenAI and DePIN execution states. A PoS consensus mechanism keeps the network secure and decentralized.

Cost-Effectiveness: GlacierDA is designed to handle large datasets and maintain data integrity cost-effectively. The GlacierDA sampling network makes all the data in a block available for validation, which keeps costs very low for applications.

Scalability for GenAI: GlacierDA functions as a foundational layer that offers scalable data hosting without transaction execution, aimed specifically at rollups and GenAI applications that rely on heavy data workloads. It plays a key role in the synergy between AI and crypto.

Permanent Storage: Permanent storage ensures that all parties can access the data at any time for verification, auditing, or compliance purposes. Because decentralized storage answers the question of where the data is published, it is crucial for maintaining trust and transparency.
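
The "access at any time for verification" property can be sketched as a commitment check: the publisher records a short digest of the data, and anyone who later retrieves the data recomputes the digest and compares. The example below is illustrative only. It uses SHA-256 and an in-memory dictionary as stand-ins for whatever commitment scheme and storage network are actually used, and the names `commit`, `publish`, and `verify` are hypothetical.

```python
import hashlib
import hmac


def commit(blob: bytes) -> str:
    """Return a hex digest that serves as a short commitment to the blob."""
    return hashlib.sha256(blob).hexdigest()


def publish(store: dict, blob: bytes) -> str:
    """Store the blob under its commitment and return the commitment.

    `store` is a plain dict standing in for a decentralized storage network.
    """
    commitment = commit(blob)
    store[commitment] = blob
    return commitment


def verify(blob: bytes, commitment: str) -> bool:
    """Check that retrieved data matches the commitment recorded earlier."""
    return hmac.compare_digest(commit(blob), commitment)


if __name__ == "__main__":
    store = {}
    commitment = publish(store, b"model-output-batch-0001")
    retrieved = store[commitment]
    print("retrieved data is intact:", verify(retrieved, commitment))
    print("tampered data is accepted:", verify(retrieved + b"!", commitment))
```

Because only the digest needs to be recorded on-chain, an auditor can check data fetched from storage long after publication without trusting the node that served it.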
