GlacierAI

Glacier VectorDB

Memory for Retrieval-Augmented Generation (RAG)

Glacier provides a data-centric network for handling datasets, with a Decentralized Vector Database built on top of Arweave, Filecoin, and BNB Greenfield. The AI training process involves a multitude of matrix operations, from word embeddings and transformer QKV matrices to softmax functions. As the foundation for building memory in Retrieval-Augmented Generation (RAG) models, the vector database has become a vital component of AI.
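To make the "memory" role concrete: in RAG, documents are stored as vectors, the user's question is embedded the same way, the most similar stored vectors are retrieved, and their text is added to the prompt. The sketch below assumes cosine similarity as the distance measure and uses hand-written 3-dimensional vectors in place of real embeddings; the function names are illustrative, not part of any Glacier API.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def top_k(query_vec: list[float], memory: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k stored vectors most similar to the query."""
    ranked = sorted(memory, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question: str, context: list[str]) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using this context:\n{joined}\n\nQuestion: {question}"

# Toy vectors standing in for embeddings produced by a real model.
memory = [
    ("Arweave stores data permanently", [1.0, 0.0, 0.0]),
    ("Filecoin is a storage market", [0.9, 0.1, 0.0]),
    ("Softmax normalizes attention scores", [0.0, 0.0, 1.0]),
]
print(build_prompt("Where is the data stored?", top_k([1.0, 0.0, 0.0], memory)))
```

In a production system the linear scan in `top_k` would be replaced by an approximate nearest-neighbour index, but the retrieve-then-augment flow is the same.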

What is Glacier VectorDB

Glacier VectorDB is a decentralized vector database built on top of decentralized storage networks such as Arweave, BNB Greenfield, and Filecoin. Its distributed data vectorization network lets every Web3 user own, control, and use their AI assets in a trustless, self-sovereign manner. By design, Glacier VectorDB integrates with AI frameworks such as LangChain and provides the following data management capabilities:

Scalable Vector Management: Glacier VectorDB scales with the growing demands of AI applications, storing and managing vector data efficiently.

LLM Framework Compatible: A database tailored for integration with Large Language Model (LLM) frameworks, providing efficient storage and retrieval of complex vector data.

Distributed Pipeline Automation: Using a blockchain-driven distributed network, Glacier VectorDB ensures secure, independent, and resilient operations for AI pipeline automation.

Composability and Modularity: Its modular design, built on an L2 rollup, enables integration across diverse ecosystems, bringing together the potential of AI and Web3.

Architecture of Glacier VectorDB

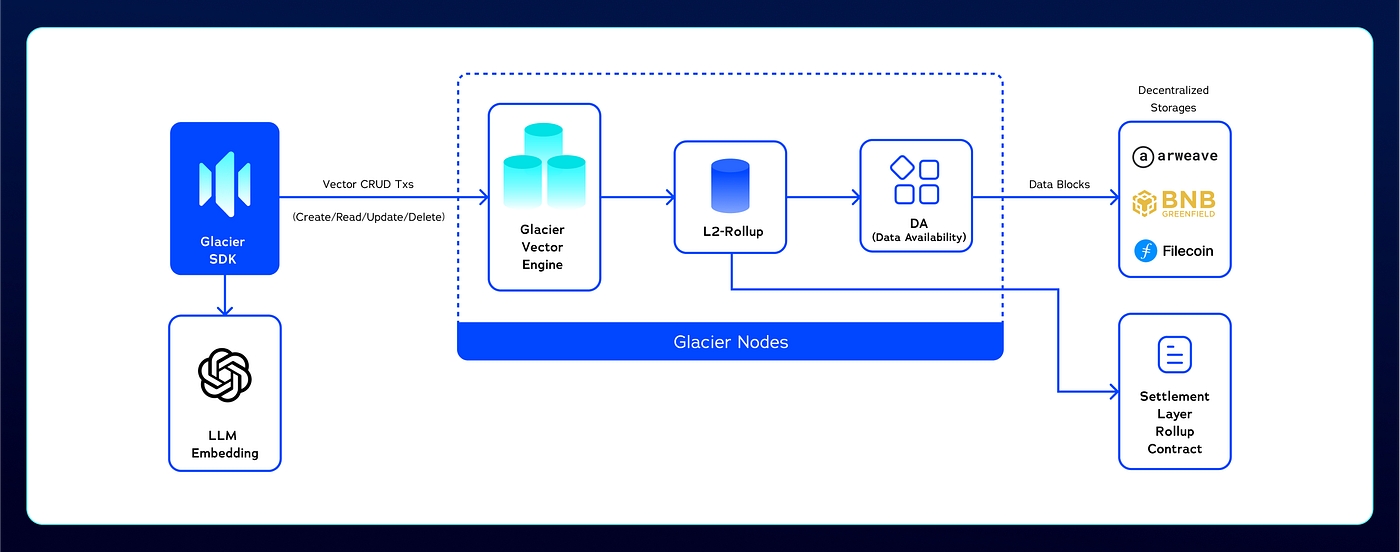

The dApp or dAgent (Decentralized Agent) uses an LLM embedding model (e.g., one of OpenAI's embedding models) to convert unstructured data into vectors, then stores those vectors in Glacier VectorDB via the Glacier SDK.
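The client-side half of this flow (text in, vector out, record ready for storage) can be sketched as follows. This is a minimal illustration under loud assumptions: `hash_embed` uses the feature-hashing trick as a deterministic stand-in for a real LLM embedding model, and `VectorRecord` and `vectorize` are hypothetical names, not the Glacier SDK's actual schema or calls.

```python
import hashlib
import math
from dataclasses import dataclass

DIM = 16  # toy size; real embedding models output far more dimensions

def hash_embed(text: str, dim: int = DIM) -> list[float]:
    """Stand-in for an LLM embedding model, using the hashing trick over tokens."""
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode()).digest()
        index = int.from_bytes(digest[:4], "big") % dim  # bucket for this token
        sign = 1.0 if digest[4] % 2 == 0 else -1.0       # random sign so collisions can cancel
        vec[index] += sign
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec      # L2-normalize non-zero vectors

@dataclass
class VectorRecord:
    """Hypothetical payload a dApp could hand to the SDK; not Glacier's real schema."""
    doc_id: str
    text: str
    vector: list[float]

def vectorize(doc_id: str, text: str) -> VectorRecord:
    """Embed a document and package it for storage."""
    return VectorRecord(doc_id=doc_id, text=text, vector=hash_embed(text))

record = vectorize("doc-1", "Glacier stores vectors on Arweave")
```

Swapping `hash_embed` for a call to a real embedding service changes only the vector quality; the record that is eventually stored keeps the same shape.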

When vector transactions (Vector Txs) enter the Glacier Vector Engine, the Engine verifies each Tx's signature and then applies the Tx to the Vector Engine's table model. At fixed intervals, the Vector Engine generates a Data Block containing the vector data from the Txs received during that interval. The Data Block is then submitted to the Data Availability Network, which ensures the data remains available by storing the Data Block on the decentralized storage network. The Rollup module computes the Data Proof from the Data Block, and the Data Proof and the Storage Merkle proof are submitted to the Settlement Layer contract.
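The verify, apply, and batch steps above can be illustrated with a small in-memory model. This is a hedged sketch, not Glacier's implementation: HMAC-SHA256 with a per-sender shared key stands in for a real wallet signature scheme such as ECDSA, the binary Merkle root (duplicating the last node on odd levels) is an assumed block commitment, and `VectorEngine`, `submit`, and `seal_block` are hypothetical names. The page does not specify how the Data Proof itself is constructed.

```python
import hashlib
import hmac
import json

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def sign_tx(key: bytes, tx: dict) -> str:
    """Stand-in signature: HMAC-SHA256 over the canonical JSON of the Tx."""
    payload = json.dumps(tx, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def merkle_root(leaves: list[bytes]) -> bytes:
    """Binary Merkle root; the last node is duplicated on odd-sized levels."""
    if not leaves:
        return sha256(b"")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

class VectorEngine:
    def __init__(self, keys: dict[str, bytes]):
        self.keys = keys      # sender -> key (stand-in for registered public keys)
        self.table = {}       # (sender, doc_id) -> vector: the "table model"
        self.pending = []     # serialized Txs accepted since the last Data Block

    def submit(self, tx: dict, sig: str) -> bool:
        """Verify the Tx signature, then apply the Tx to the table."""
        key = self.keys.get(tx["sender"])
        if key is None or not hmac.compare_digest(sign_tx(key, tx), sig):
            return False      # reject unknown senders and forged signatures
        self.table[(tx["sender"], tx["doc_id"])] = tx["vector"]
        self.pending.append(json.dumps(tx, sort_keys=True).encode())
        return True

    def seal_block(self) -> dict:
        """Package the interval's Txs into a Data Block committed to by a Merkle root."""
        block = {"txs": self.pending, "root": merkle_root(self.pending).hex()}
        self.pending = []
        return block
```

Committing to the block with a Merkle root means a settlement contract only needs the 32-byte root, while any single Tx can later be proven against it with a logarithmic-size inclusion path.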

VectorDB Integration with AI Ecosystem (LangChain)

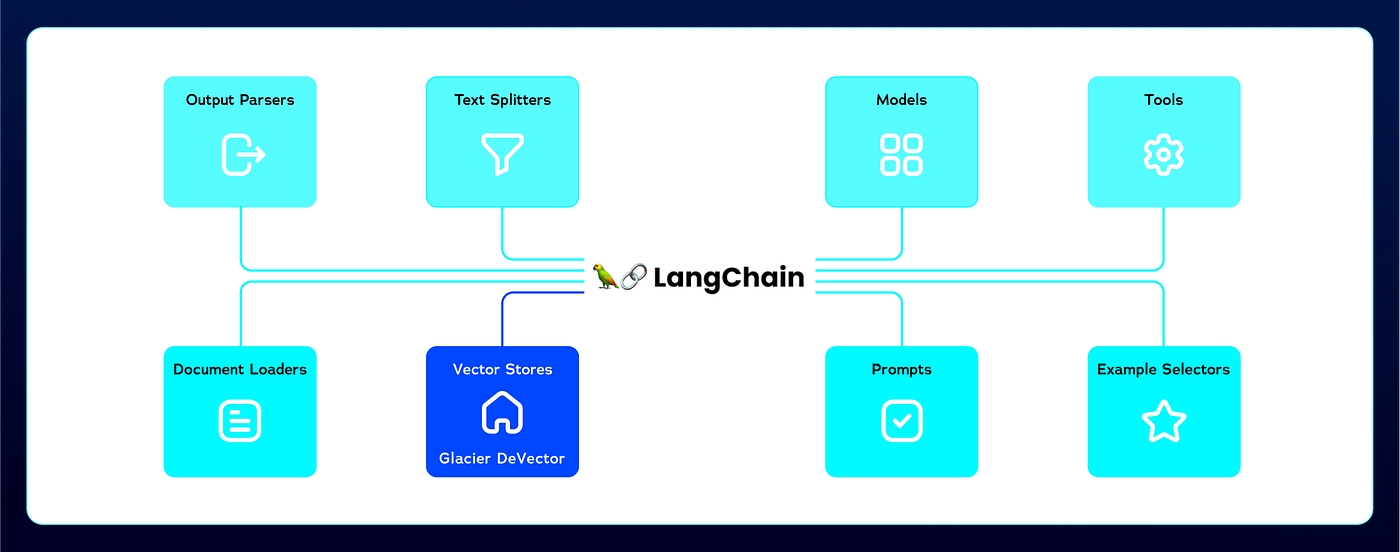

Glacier VectorDB bridges the gap between the decentralized data stack and AI frameworks such as LangChain, letting users incorporate external context into their LLM interactions. This opens up possibilities such as advanced transaction data analysis, Web3 intent-centric agents, and a range of other capabilities.

VectorDB integrates with LangChain by supplying external context to the LLM in various formats, including text files, CSV/JSON data, and even code from GitHub repositories. This lets users supplement their LLM's knowledge base with relevant information, leading to more accurate and relevant results.
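Before files like these are embedded, they are usually normalized into text and split into chunks small enough to embed and retrieve individually. The helpers below are a hypothetical sketch of that preprocessing using only the standard library; the chunk size, overlap, and "column: value" row format are illustrative choices, not Glacier or LangChain defaults.

```python
import csv
import io

def csv_rows_to_texts(csv_text: str) -> list[str]:
    """Flatten each CSV row into a 'column: value' string suitable for embedding."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return ["; ".join(f"{key}: {value}" for key, value in row.items()) for row in reader]

def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing some text twice.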

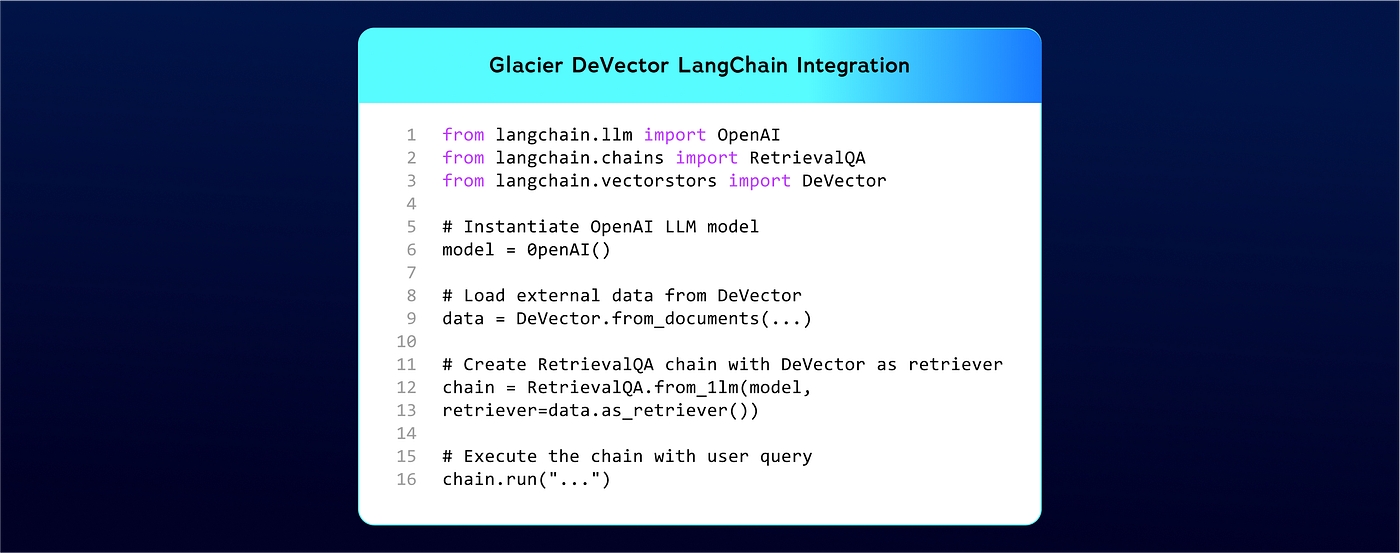

Integrating VectorDB with LangChain is simple and requires just a few lines of code:
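The exact Glacier SDK calls are not shown here, so the following is only a hypothetical sketch of the integration pattern. `ToyVectorStore` is a plain-Python, in-memory stand-in that exposes `add_texts` and `similarity_search`, the method names used by LangChain's `VectorStore` interface; it imports neither LangChain nor the Glacier SDK, its local `Document` only mirrors the `page_content` and `metadata` fields of LangChain's class, and the `embed` callable is an assumption supplied by the caller.

```python
import math
import uuid
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Document:
    """Local stand-in mirroring LangChain's Document fields; not the real class."""
    page_content: str
    metadata: dict = field(default_factory=dict)

class ToyVectorStore:
    """Hypothetical store; a Glacier-backed version would persist rows via the SDK."""

    def __init__(self, embed: Callable[[str], list[float]]):
        self.embed = embed
        self.rows: list[tuple[str, list[float], Document]] = []

    def add_texts(self, texts: list[str], metadatas: Optional[list[dict]] = None) -> list[str]:
        """Embed and store texts; returns one generated id per text."""
        metadatas = metadatas or [{} for _ in texts]
        ids = []
        for text, meta in zip(texts, metadatas):
            row_id = str(uuid.uuid4())
            self.rows.append((row_id, self.embed(text), Document(text, meta)))
            ids.append(row_id)
        return ids

    def similarity_search(self, query: str, k: int = 4) -> list[Document]:
        """Return the k stored documents with the highest cosine similarity."""
        q = self.embed(query)

        def score(vec: list[float]) -> float:
            dot = sum(a * b for a, b in zip(q, vec))
            norm = math.sqrt(sum(a * a for a in q)) * math.sqrt(sum(b * b for b in vec))
            return dot / norm if norm else 0.0

        ranked = sorted(self.rows, key=lambda row: score(row[1]), reverse=True)
        return [doc for _, _, doc in ranked[:k]]
```

Keeping the same method names as LangChain's interface is what lets a retrieval chain treat a custom backend interchangeably with built-in ones.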

Glacier Chatbot-Bench

Leveraging Human Intelligence to Train Machine Intelligence

Chatbot-Bench is a benchmarking product designed to evaluate and compare the performance of large language models (LLMs) in a trustless, decentralized way. Participants take part in anonymized, randomized LLM battles, contributing to a comprehensive and unbiased rating system while earning rewards. Business partners can build datasets and feed them to the LLMs through Glacier VectorDB, ensuring consistent and reliable performance evaluations.
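The page does not say how battle outcomes are turned into ratings. A common choice for pairwise comparisons is the Elo system, sketched below as an assumption rather than as Chatbot-Bench's actual method; the K-factor of 32 and the starting rating of 1000 are conventional illustrative values.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

def update(ratings: dict[str, float], a: str, b: str, score_a: float, k: float = 32.0) -> None:
    """Apply one battle result in place. score_a: 1 = A wins, 0 = B wins, 0.5 = tie."""
    e_a = expected_score(ratings[a], ratings[b])
    ratings[a] += k * (score_a - e_a)
    ratings[b] += k * ((1 - score_a) - (1 - e_a))

ratings = {"model-x": 1000.0, "model-y": 1000.0}
update(ratings, "model-x", "model-y", 1.0)  # a human voter preferred model-x
```

Because an upset against a higher-rated model moves ratings more than an expected win, many noisy individual votes can still converge to a stable ranking.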

Glacier secures data with EigenLayer, which extends Ethereum-grade security to the network. Glacier makes every stage of the data lifecycle privacy-preserving, permissionless, verifiable, and decentralized, including, but not limited to, annotation, dataset management, customization, and model assessment. By fostering an ecosystem where data can thrive in a secure and open environment, Glacier aims to set new standards for how data is managed and used for AI.

What is Glacier Chatbot-Bench

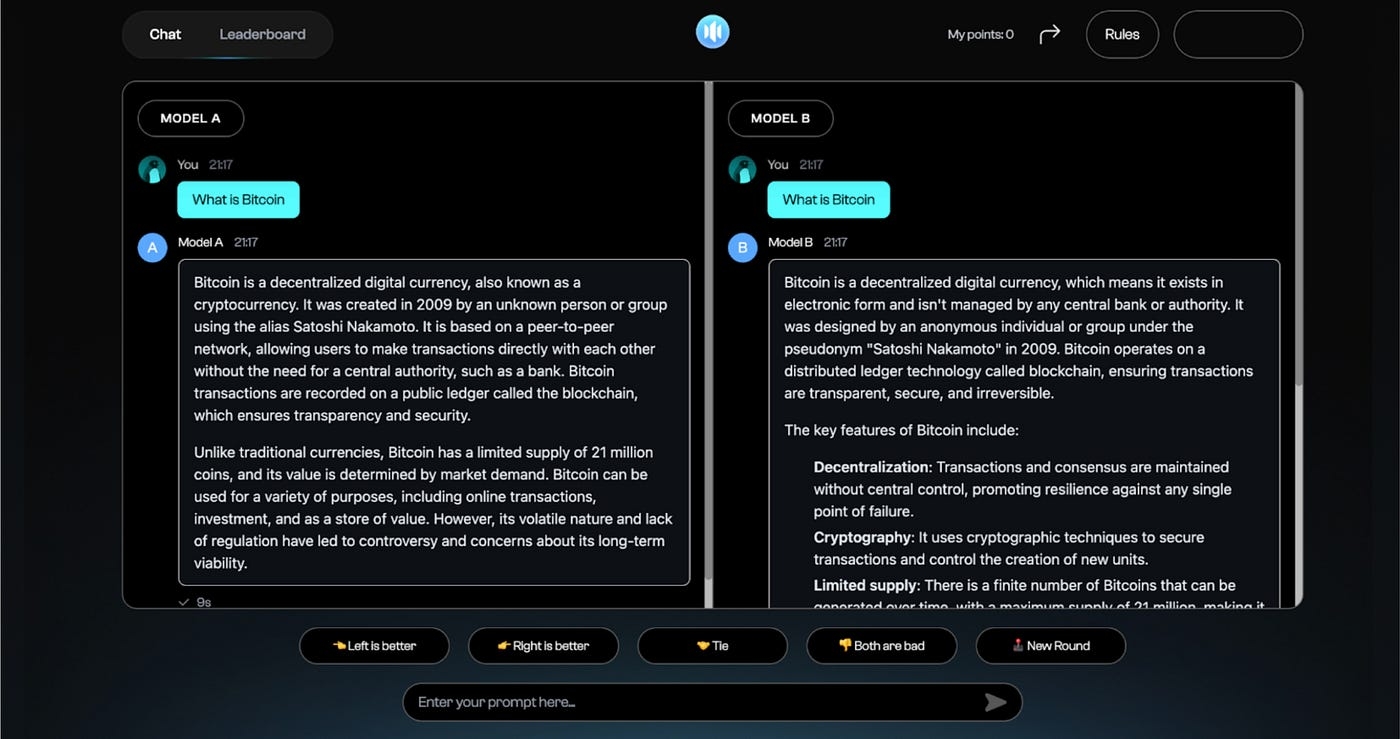


Strategically, Glacier Chatbot-Bench represents a pivotal step toward realizing our broader vision for Glacier Network.

Chatbot-Bench Architecture

At its core, the Chatbot-Bench platform integrates 44 LLMs, letting users compare model performance through randomized battles. Because answers to open-ended questions are hard to score automatically, the platform relies on human evaluations by Web3 users, who are rewarded for their contributions.
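A randomized, anonymized battle can be modeled as drawing two distinct models, showing their answers only as "A" and "B", and revealing the identities after the vote. The sketch below is a hypothetical illustration: the placeholder model names, the `Battle` class, and the injected `random.Random` source are assumptions, not the platform's actual code.

```python
import random
from dataclasses import dataclass

@dataclass
class Battle:
    model_a: str  # identity hidden from the voter until the vote is cast
    model_b: str

    def reveal(self, vote: str) -> tuple[str, str]:
        """Map an anonymous vote ('A' or 'B') back to (winner, loser)."""
        if vote == "A":
            return self.model_a, self.model_b
        if vote == "B":
            return self.model_b, self.model_a
        raise ValueError("vote must be 'A' or 'B'")

def new_battle(models: list[str], rng: random.Random) -> Battle:
    """Pick two distinct models in random order so position carries no signal."""
    a, b = rng.sample(models, 2)
    return Battle(a, b)

models = [f"model-{i}" for i in range(44)]  # placeholder names for the 44 LLMs
battle = new_battle(models, random.Random(0))
```

The `(winner, loser)` pair returned by `reveal` is exactly the input a pairwise rating update needs.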

To ensure data privacy and security, Chatbot-Bench employs Privacy-Preserving Machine Learning (PPML) techniques, creating a secure and open environment where data can be effectively managed and used.
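The page does not name the specific PPML techniques used. One standard building block is additive secret sharing, sketched here as an illustration only: each vote is split into random shares held by different parties, so no single party sees an individual vote, yet the total can still be computed. The modulus and the three-party setup are illustrative assumptions.

```python
import secrets

PRIME = 2**61 - 1  # Mersenne prime used as the modulus for shares

def share(value: int, n_parties: int) -> list[int]:
    """Split value into n additive shares mod PRIME; any n-1 of them reveal nothing."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def aggregate(all_shares: list[list[int]]) -> int:
    """Each party sums the shares it holds; combining the partial sums gives the total."""
    n_parties = len(all_shares[0])
    partial = [sum(s[i] for s in all_shares) % PRIME for i in range(n_parties)]
    return sum(partial) % PRIME

votes = [1, 0, 1, 1]                      # e.g. 1 = "preferred model A"
shared = [share(v, 3) for v in votes]     # party i receives shared[j][i] for every vote j
```

Only the per-party partial sums are ever combined, so the final tally is revealed while each individual share looks uniformly random.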

Chatbot-Bench is also integrated with Glacier VectorDB, enabling on-chain and off-chain data queries and making it well suited to the Web3 ecosystem. Powered by advanced AI learning capabilities, the architecture aims to set a new standard in AI data management and evaluation.
