AI Industry

AI Boom and Bust

The ‘birth of AI’ can be traced to the cybernetics movement of the 1940s, which grew from the idea that machines and humans might be fundamentally similar.

Alan Turing’s question ‘Can machines think?’, posed in his 1950 paper ‘Computing Machinery and Intelligence,’ sparked exploration in the field of artificial intelligence. In that paper, Turing introduced the ‘Imitation Game,’ in which a human interrogator, communicating only through text, tries to tell a machine apart from a human. Since that pivotal moment, researchers have been working to advance the field of AI.

The period between the 1940s and 1990s saw the rise of early symbolic AI, natural language processing, and expert systems in finance and medicine. However, AI development experienced multiple boom-and-bust cycles, driven by over-optimism, funding challenges, and technological limitations.

These early decades laid the groundwork for modern AI. The internet boom of the 1990s, combined with the subsequent surge of Big Data and advances in computing power, ignited an AI revolution. This convergence enabled artificial neural networks to achieve major breakthroughs, propelling AI to unprecedented heights.

In 2017, researchers at Google published the influential paper ‘Attention Is All You Need,’ which proposed the Transformer, a simple yet revolutionary architecture that uses self-attention to process text sequences efficiently. This innovation ushered in a new era of generative AI.

As we enter 2024, we are witnessing an unprecedented convergence of AI and crypto, with these technologies intersecting in dynamic ways that are reshaping industries and creating new opportunities.

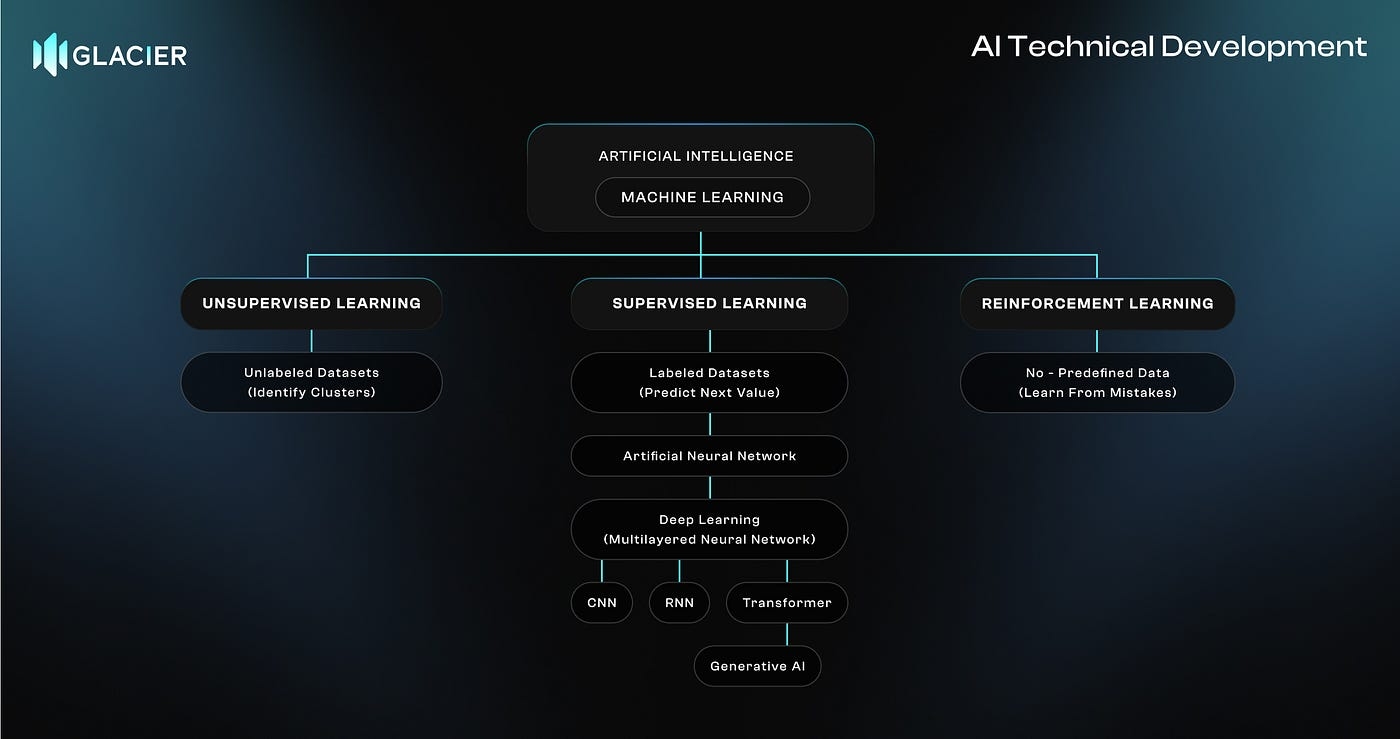

Technical Development

Machine learning (ML) is a subset of artificial intelligence that enables machines to learn from data and improve their performance over time without explicit programming. Before the advent of ML, simpler methods such as pattern matching were employed to search for data based on predefined criteria. The term ‘machine learning’ was first introduced by Arthur Samuel at IBM in 1959, marking a pivotal shift towards more adaptive, data-driven approaches in AI.

Supervised Learning

Supervised learning is the most commonly used form of machine learning, where the model learns from labeled datasets.

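During training, the model receives input data together with the corresponding output labels (the correct answers). It learns a mapping from inputs to outputs by adjusting its weights and biases, so that it can predict outcomes for new, unseen data. For one layer of artificial neurons, this mapping is

$$a^{(1)} = \sigma\left(W a^{(0)} + b\right)$$

where $a^{(0)}$ is the vector of input activations, $W$ is the weight matrix, $b$ is the bias vector, $\sigma$ is an activation function such as the sigmoid, and $a^{(1)}$ is the resulting vector of output activations.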
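To make the supervised-learning loop concrete, here is a minimal sketch assuming a single sigmoid neuron (logistic regression) trained by plain gradient descent on log loss. Each prediction is the sigmoid of a weighted sum of the inputs plus a bias, and every labeled example nudges the weights toward the correct answer. The toy AND dataset, the learning rate, and the function names `predict` and `train` are hypothetical illustrations, not part of the original text.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Logistic activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b: float, x) -> float:
    """Forward pass of one neuron: sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(data, lr: float = 0.5, epochs: int = 2000, seed: int = 0):
    """Fit w and b to labeled (x, y) pairs with per-example gradient descent on log loss."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            # For a sigmoid output with log loss, dLoss/dz simplifies to (prediction - label).
            err = predict(w, b, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Hypothetical toy dataset: the label is 1 only when both inputs are 1 (logical AND).
data = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
w, b = train(data)
print([round(predict(w, b, x)) for x, _ in data])  # [0, 0, 0, 1]
```

A single neuron can only learn linearly separable mappings such as AND; stacking layers, as described under Artificial Neural Network, removes that limit.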

Unsupervised Learning

Instead of receiving labeled answers from a strict ‘teacher,’ unsupervised learning is more like a baby learning about the world by observing and imitating. Because no labels are required, the system can make use of the vast amounts of unlabeled data freely available on the internet.

The most basic way to model the world in unsupervised learning is to assume it consists of distinct groups of objects that share similar properties. We can design algorithms to cluster datasets and discover hidden patterns, trends, and relationships.
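Clustering can be sketched with k-means (Lloyd’s algorithm), which is one standard choice among many clustering algorithms, not necessarily the one the text has in mind. It alternates two steps: assign every point to its nearest centroid, then move each centroid to the mean of its assigned points. No labels are involved; the groups emerge from the data alone. The naive initialization (first k points) and the toy points are assumptions for illustration.

```python
import math


def kmeans(points, k, iters=100):
    """Lloyd's k-means on unlabeled points; returns (cluster labels, centroids)."""
    centroids = [tuple(p) for p in points[:k]]  # naive deterministic init: first k points
    labels = []
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical unlabeled 2-D data with two obvious groups.
points = [(1.0, 1.0), (9.0, 9.0), (1.2, 0.8), (0.9, 1.1), (8.8, 9.2), (9.1, 8.9)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 0, 1, 1]
```

The cluster indices themselves carry no meaning; k-means only discovers which points belong together, and its result can depend on the initial centroids.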

Reinforcement Learning

Reinforcement learning is useful for acquiring skills that we don’t fully understand ourselves, especially in unseen environments. Rather than giving the AI correct answers, we provide feedback on whether its actions succeed or fail (a reward-and-punishment paradigm), and the AI works out through trial and error which sequence of actions leads to the highest cumulative reward.
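As a minimal sketch of this reward-driven loop, here is tabular Q-learning, one classic reinforcement-learning algorithm chosen for illustration, on a hypothetical five-cell corridor where only reaching the rightmost cell is rewarded. The agent is never told to move right; it discovers that from the reward signal alone. The environment, the hyperparameters (`alpha`, `gamma`, `epsilon`), and the function names are assumptions.

```python
import random

N_STATES = 5        # hypothetical corridor: cells 0..4, the goal is cell 4
ACTIONS = (-1, 1)   # step left or step right


def step(state, action):
    """Toy environment: move along the corridor; reward 1 only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(q, s, rng):
    """Best-valued action in state s, breaking ties at random."""
    best = max(q[(s, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(s, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, s, rng)
            s2, r, done = step(s, a)
            # Temporal-difference update toward reward + discounted best next value.
            target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s2
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: move right in every non-goal cell
```

The discount factor `gamma` makes rewards that arrive sooner worth more, which is why the learned policy heads straight for the goal.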

Artificial Neural Network

As the name suggests, artificial neural networks are inspired by the brain. Each network consists of an input layer of neurons, hidden layers, and an output layer. The core function of the neural network is to pass activations from one layer to the next.

The entire network can be described as a function that takes in inputs, processes them, and produces an output. This function involves a massive number of parameters (its weights and biases) and performs numerous matrix-vector multiplications and other calculations. Once those parameters have been tuned so that the function produces accurate outputs, it becomes a powerful model.
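The forward pass described above can be sketched in plain Python, assuming sigmoid activations: each layer multiplies the incoming activation vector by its weight matrix, adds its bias vector, and applies the activation function, and the result becomes the input to the next layer. The 3-4-2 layer sizes and the weight values are hypothetical and untrained; they only show the mechanics and how the parameter count adds up.

```python
import math


def sigmoid(z):
    """Logistic activation applied to one neuron's weighted sum."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(W, b, a):
    """One layer: sigmoid(W a + b), with W a computed as a matrix-vector product."""
    return [
        sigmoid(sum(w_ij * a_j for w_ij, a_j in zip(row, a)) + b_i)
        for row, b_i in zip(W, b)
    ]


def network_forward(layers, x):
    """Pass activations from the input layer through every layer in turn."""
    a = x
    for W, b in layers:
        a = layer_forward(W, b, a)
    return a


def count_parameters(layers):
    """Total number of weights plus biases across all layers."""
    return sum(len(W) * len(W[0]) + len(b) for W, b in layers)


# Hypothetical 3-4-2 network (3 inputs, 4 hidden neurons, 2 outputs), untrained.
layers = [
    ([[0.2, -0.1, 0.4], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.1], [0.0, -0.5, 0.6]],
     [0.1, 0.0, -0.1, 0.2]),
    ([[0.3, -0.6, 0.9, 0.1], [-0.4, 0.2, 0.5, -0.7]],
     [0.0, 0.05]),
]
out = network_forward(layers, [1.0, 0.5, -1.0])
print(len(out), count_parameters(layers))  # 2 26
```

Even this tiny network has 26 parameters; production models apply the same layer-by-layer computation with billions of them, which is why fast matrix arithmetic matters so much.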

Transformer

A Transformer is a specific type of neural network and the core invention behind ChatGPT and the current generative AI boom. It was introduced in 2017 by researchers at Google in the paper ‘Attention Is All You Need.’

When a chatbot generates text, the input is first broken into smaller pieces called tokens. Each token is mapped to a vector (a list of numbers) that encodes the meaning of that piece. These vectors pass through an attention block, which lets each token take in context from the other tokens, and then through a multi-layer perceptron. This attention-plus-perceptron pattern repeats across many layers until, at the very end, the model produces a probability distribution over all possible tokens that might come next.
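The attention step and the final probability distribution can be sketched as follows, under heavy simplifying assumptions: a single attention head with a causal mask (each token sees only itself and earlier tokens), raw token vectors used directly as queries, keys, and values instead of learned projections, and no multi-layer perceptron or layer stacking. The 2-D token vectors, the three-word vocabulary, and the unembedding matrix `W_U` are hypothetical.

```python
import math


def softmax(xs):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(X):
    """Scaled dot-product self-attention where each token sees only itself and
    earlier tokens. Simplification: queries, keys and values are the raw token
    vectors; real Transformers first apply learned W_Q, W_K, W_V projections."""
    d = len(X[0])
    out = []
    for i, q in enumerate(X):
        visible = X[: i + 1]  # causal mask: no peeking at later tokens
        scores = [sum(qj * kj for qj, kj in zip(q, k)) / math.sqrt(d) for k in visible]
        weights = softmax(scores)  # how strongly token i attends to each visible token
        out.append([sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)])
    return out


# Hypothetical 2-D vectors for a two-token input such as "the cat".
X = [[1.0, 0.0], [0.5, 1.0]]
context = causal_self_attention(X)

# Hypothetical unembedding matrix: one row per entry in a tiny vocabulary.
vocab = ["sat", "ran", "blue"]
W_U = [[0.9, 1.2], [0.4, 0.8], [-0.5, -0.3]]
logits = [sum(w * h for w, h in zip(row, context[-1])) for row in W_U]
probs = softmax(logits)  # probability distribution over the next token
print(max(zip(probs, vocab))[1])  # most likely next token: sat
```

Dividing the scores by the square root of the vector dimension keeps them in a range where softmax does not saturate; the same normalization appears in the original Transformer design.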

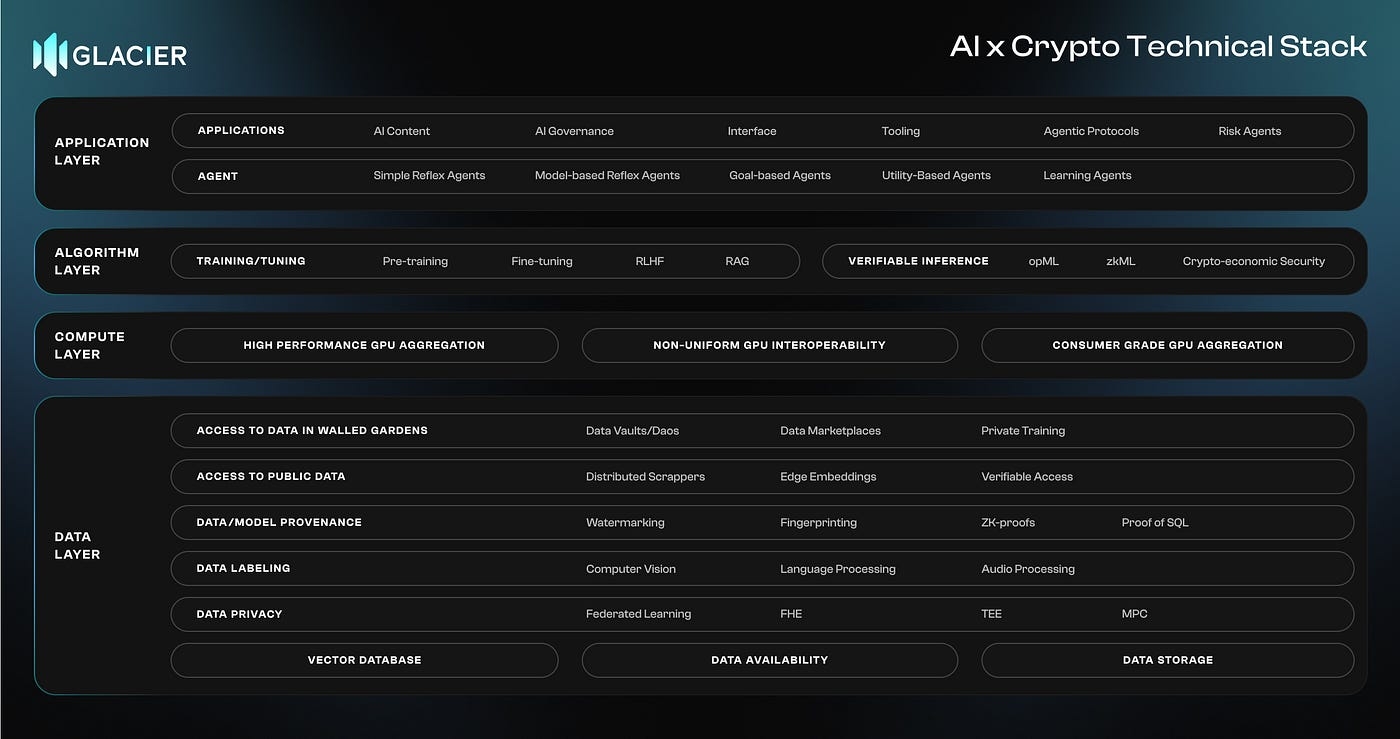

AI x Crypto Technical Stack

The year 2024 marks a pivotal moment in the convergence of AI and crypto, a shift significantly catalyzed by Vitalik Buterin’s essay ‘The Promise and Challenges of Crypto + AI Applications.’

In the AI-Crypto technical stack, the architecture is built upon four critical layers: the application layer, algorithm layer, compute layer, and data layer. Each of these layers plays a vital role in the seamless interaction between artificial intelligence and blockchain technology, creating a robust foundation for decentralized, intelligent systems.

The application layer serves as the user-facing component, enabling interaction with AI-powered decentralized applications and agents. The algorithm layer underpins the intelligence, where AI models and blockchain consensus mechanisms work together to enhance efficiency, scalability, and security. The compute layer powers the execution, providing the necessary processing capabilities to support AI models and handle blockchain transactions. Finally, we believe the data layer acts as the backbone, ensuring secure, decentralized, and immutable data storage, essential for both training AI models and maintaining blockchain integrity.

The Crucial Role of Data in AI

Data is the bedrock of AI models. It provides the basis for learning, allowing AI to make predictions, recognize patterns, and interpret the world. Without robust data sets, AI systems would merely be complex algorithms without practical application. Just as humans learn from experiences, AI models learn from data. The richness of these data experiences fundamentally shapes an AI’s capabilities. The training phase for AI involves processing extensive data sets to detect patterns and learn from them.

Ongoing Learning and Quality of Data

In real-world applications, AI must continuously adapt by learning from new data. This constant adaptation is crucial for the systems to improve their efficiency and accuracy over time. However, the quality of data is as important as its quantity: clean, well-structured data significantly enhances AI performance. Data scientists therefore invest considerable effort in preparing data so that it is well suited for training AI models.
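A few typical data-preparation steps can be sketched on a small labeled text dataset: dropping incomplete examples, normalizing text, and removing duplicates that would otherwise be over-weighted during training. The record schema (`text` and `label` fields), the sample rows, and the `clean` function are hypothetical; real pipelines add many more checks.

```python
def clean(records):
    """Drop incomplete rows, normalize text fields, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        text, label = rec.get("text"), rec.get("label")
        if not text or label is None:
            continue  # incomplete example: unusable for supervised training
        text = " ".join(text.split()).lower()  # collapse whitespace, lowercase
        key = (text, label)
        if key in seen:
            continue  # duplicate would be over-weighted during training
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    return cleaned


raw = [
    {"text": "Great product!", "label": 1},
    {"text": "  great   PRODUCT! ", "label": 1},  # duplicate after normalization
    {"text": "", "label": 0},                     # missing text
    {"text": "Arrived broken", "label": None},    # missing label
    {"text": "Arrived broken", "label": 0},
]
print(clean(raw))
# [{'text': 'great product!', 'label': 1}, {'text': 'arrived broken', 'label': 0}]
```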

Addressing Data Security and Privacy with Blockchain

AI and machine learning (ML) models require vast data sets for training, which raises concerns about data breaches, tampering, and privacy. Blockchain technology offers solutions to these issues through tamper-proof data storage, enhanced data privacy, and secure data sharing. The inherent transparency of blockchain allows for complete traceability of data provenance and usage, which is vital for training reliable AI/ML models.
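The provenance idea can be illustrated with a hash-linked log, the core data structure behind a blockchain’s tamper evidence: each record stores the hash of the previous one, so editing any past entry breaks every later link. This is a minimal single-machine sketch; it deliberately omits distributed consensus, replication, and signatures, and the record fields and dataset identifiers are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(prev_hash, entry):
    """Hash an entry together with the previous hash, linking the chain."""
    payload = json.dumps({"prev": prev_hash, "entry": entry}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain, entry):
    """Add a provenance record that commits to everything before it."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"entry": entry, "prev": prev, "hash": record_hash(prev, entry)})


def verify(chain):
    """Recompute every link; any edited entry breaks its hash and all later links."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != record_hash(prev, block["entry"]):
            return False
        prev = block["hash"]
    return True


chain = []
append(chain, {"dataset": "reviews-v1", "action": "ingested", "by": "provider-A"})
append(chain, {"dataset": "reviews-v1", "action": "used_for_training", "by": "model-X"})
print(verify(chain))  # True
chain[0]["entry"]["by"] = "provider-B"  # tamper with recorded history
print(verify(chain))  # False
```

Hashing alone makes tampering detectable rather than impossible; it is the replication of the log across many independent nodes that makes rewriting history impractical on a real blockchain.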

Moreover, blockchain facilitates the creation of decentralized data marketplaces, allowing direct interactions between data providers and consumers. This democratization of data access spurs innovation in the AI/ML fields and supports seamless data sharing across platforms, enhancing collaborative R&D and accelerating technological progress.

Glacier Network: Data Layer of AI

As proponents of Web3 and its principles, we recognized the potential to integrate these values into traditional data management, aiming for a system that is unbiased, verifiable, transparent, validated, and open.

Glacier embodies this vision by providing a sovereign AI data infrastructure that meets the expansive needs of AI models. Glacier Network is building a programmable, modular and scalable blockchain infrastructure for agents, models and datasets, supercharging AI at scale.
